
Can We Learn To Talk With Whales? Introducing Project CETI

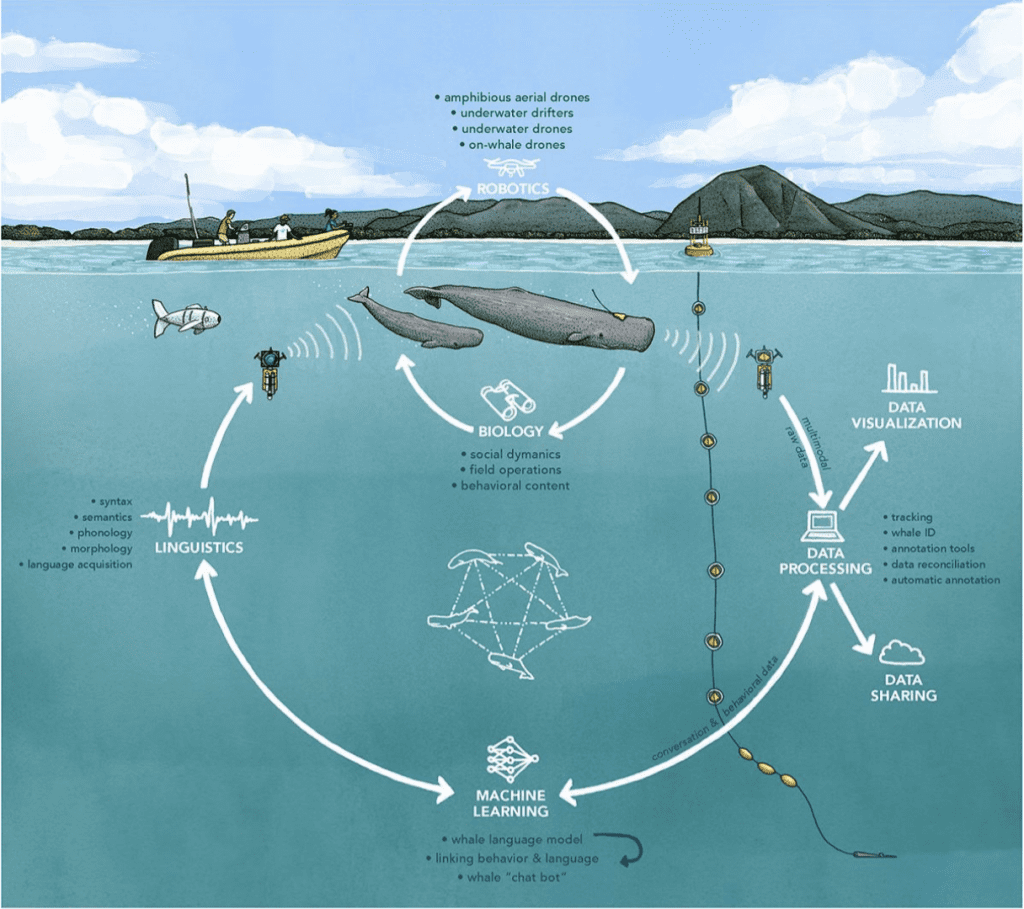

Inspired by the search for extraterrestrial intelligence (SETI), project leader Dr. David Gruber and an eclectic band of scientists and researchers are seeking to decipher the language of sperm whales, animals that might be described as enigmatic aliens living in our midst. To do this, they are applying the latest technologies, including AI, cryptography, machine learning, and robotics.

Header image: Sperm whales socializing. Photo by Brian J. Skerry

Companion story: Exploring Whale Culture: An Interview With NatGeo Photojournalist Brian Skerry

Project CETI (Cetacean Translation Initiative), a non-profit organization supported by the 2020 TED Audacious Project, is applying advanced machine learning and gentle robotics to decipher the communication of the world’s most enigmatic ocean species: the sperm whale. By interpreting their voices and, we hope, communicating back, we aim to show that today’s most cutting-edge technologies can benefit not only humankind but also other species on this planet. By enabling humans to deeply understand and protect the life around us, we redefine our very understanding of the word “we.”

As with the Earthrise photo from Project Apollo, CETI’s discoveries and progress have the potential to significantly reshape humanity’s understanding of its place on this planet. By regularly sharing our findings with the public through partners like the National Geographic Society, CETI will inspire a deeper sense of wonder for Earth’s matrix of life and provide a uniquely strong boost to a new phase of the broader environmental movement.

Founded and led by scientists, CETI has brought together leading cryptographers, roboticists, linguists, AI experts, technologists and marine biologists to:

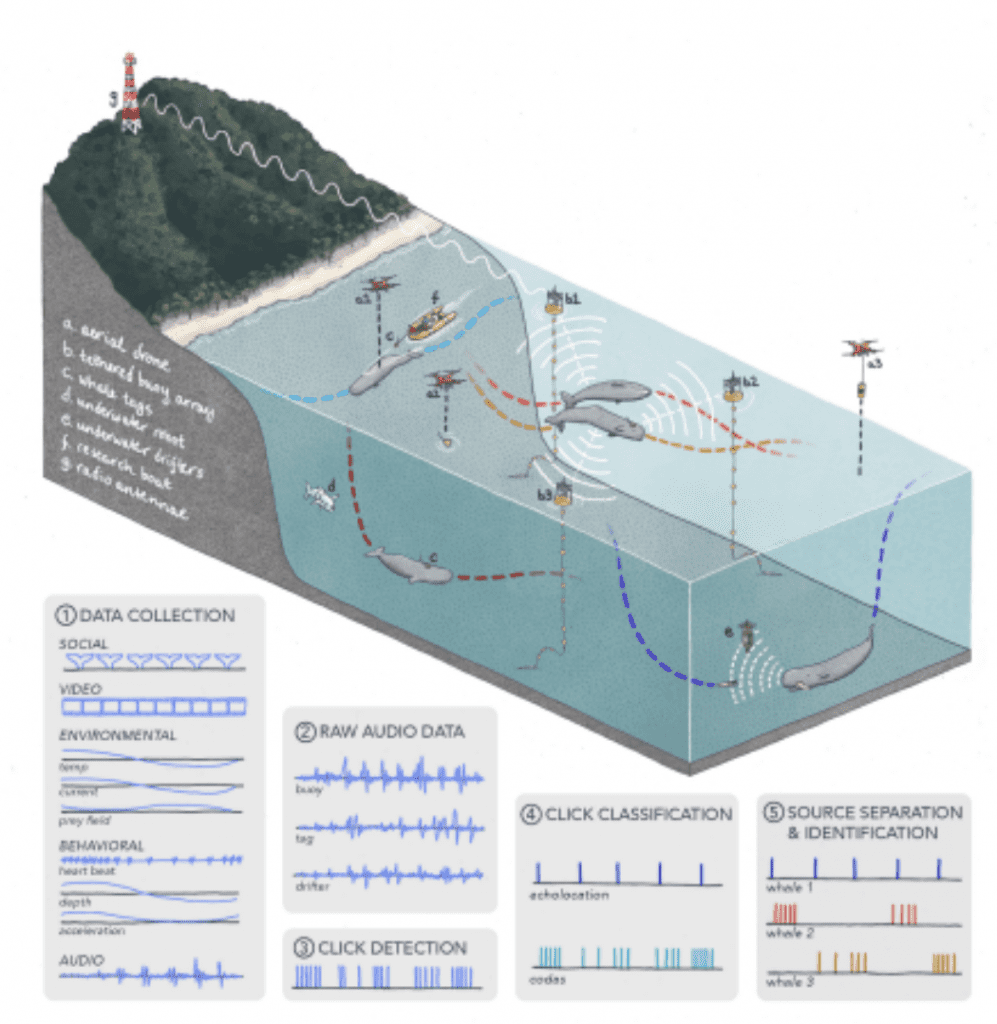

● Develop the most delicate robotics technologies, including through a partnership with the National Geographic Society’s Exploration Technology Lab, to listen to whales and put their sounds into context.

● Deploy a “Core Whale Listening System,” a novel hydrophone array to study a population of whales in a 20×20 kilometer field site.

● Build on substantial data on the whales’ sounds, social lives, and behavior already obtained by the Dominica Sperm Whale Project.

● Create a bespoke big-data pipeline to examine the recorded data and decode it using advanced machine learning, natural language processing and data science.

● Launch a public interface, data visualization tools, a communications platform and a leadership initiative, in collaboration with key partners, to engage and foster the global community.

WHY SPERM WHALES?

Sperm whales have the largest brains of any species and share traits strikingly similar to those of humans. They have higher-level functions such as conscious thought and future planning, as well as speech and feelings of compassion, love, suffering and intuition. They live in matriarchal, multicultural societies, have dialects, and form strong multigenerational family bonds. Modern whales have been great stewards of the ocean environment for more than 30 million years, roughly five times longer than the earliest hominids have existed. Our understanding of these animals is just beginning.

WHY NOW?

In the late 1960s, scientists including principal CETI advisor Dr. Roger Payne discovered that whales sing to one another. Payne’s recordings, Songs of the Humpback Whale, sparked the “Save the Whales” movement, one of the most successful conservation initiatives in history. The campaign helped lead to the Marine Mammal Protection Act and to the end of large-scale commercial whaling, saving several whale populations from extinction.

All this came from simply hearing the sounds of whales. Imagine what could happen if we could understand them and communicate back. For the first time in history, advances in engineering, artificial intelligence and linguistics are making it possible to understand the communication of whales and other animals in far greater depth. Our species is at a critical juncture, one where we can work together, with the help of compassionate technologies, to build a brighter, more connected and more equitable future. CETI also hopes to provide a blueprint for future ambitious, collaborative initiatives that can help us on this journey.

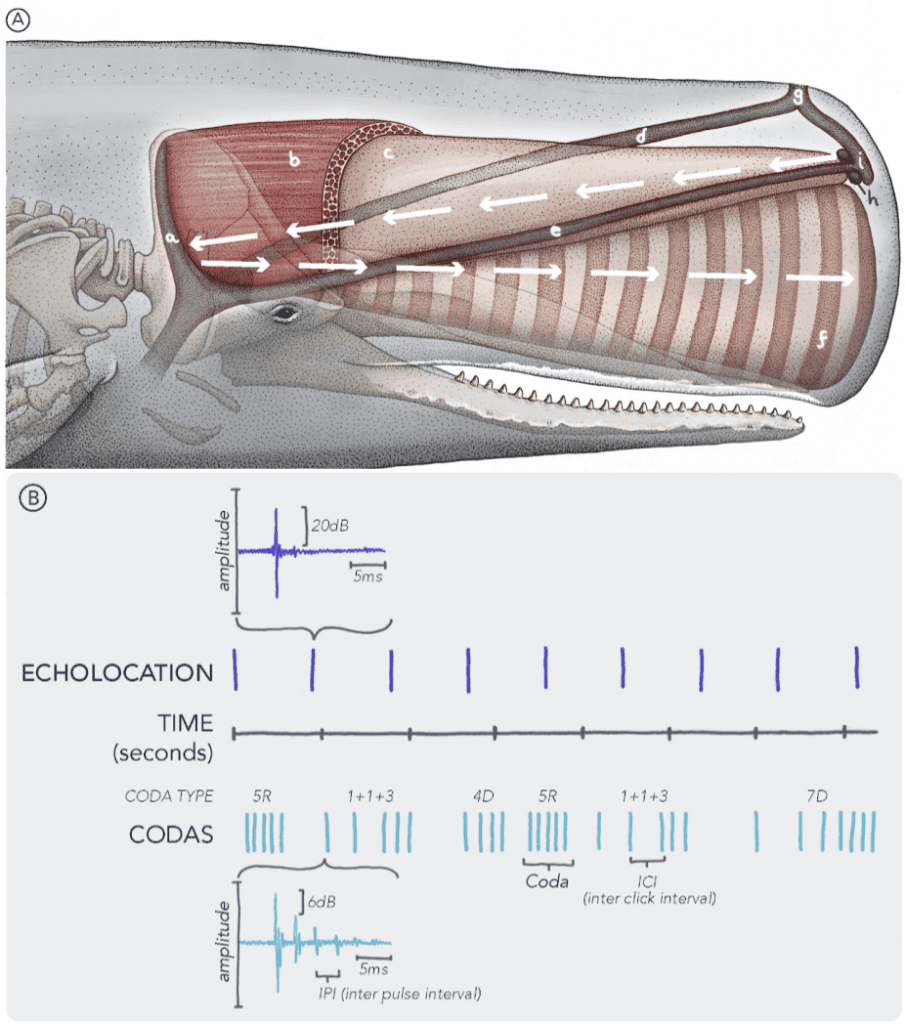

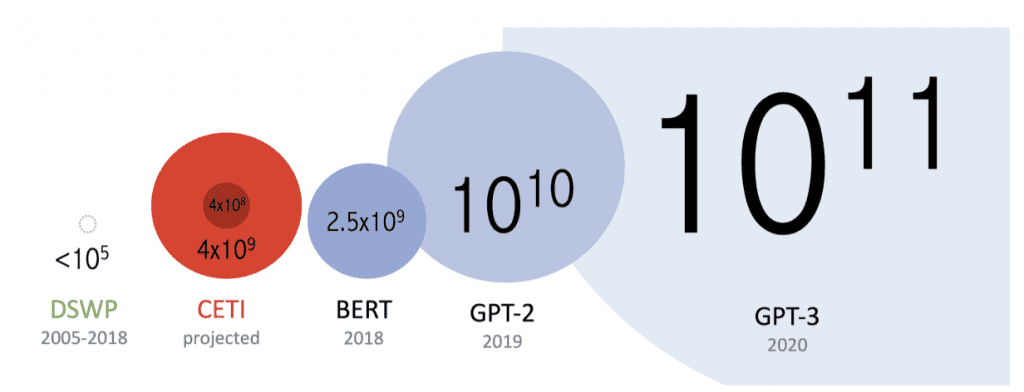

Shown in red is the estimated size of a new dataset that Project CETI, an interdisciplinary initiative for cetacean communication interpretation, plans to collect in Dominica. The estimate assumes nearly continuous monitoring of 50–400 whales, with 75–80% of their vocalizations being echolocation clicks and 20–25% being coda clicks. A typical Caribbean sperm whale coda has 5 clicks and lasts 4 s (including the silence before the next coda), yielding a rate of 5 / 4 = 1.25 clicks/s. Overall, we estimate that it would be possible to collect between 400M and 4B clicks per year, as a longitudinal and continuous recording of bioacoustic signals together with detailed behavioral and environmental data.
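The click arithmetic in the caption can be checked with a short Python sketch. The 5 clicks per coda, the 4 s coda period, and the 20–25% coda share come from the caption; `coda_seconds_per_day` (how long each whale spends producing codas per day) is a hypothetical parameter introduced here for illustration, because the caption does not state it. The sketch therefore shows how the pieces combine rather than reproducing CETI's own calculation.

```python
# Back-of-envelope click arithmetic for the Dominica dataset caption.
# From the caption: 5 clicks per coda, a 4 s coda period (including the
# silence before the next coda), and a 20-25% coda-click share.
# HYPOTHETICAL: coda_seconds_per_day (time each whale spends producing codas
# per day) is NOT given in the caption; it is an illustrative parameter.

DAYS_PER_YEAR = 365


def coda_click_rate(clicks_per_coda: int = 5, coda_period_s: float = 4.0) -> float:
    """Clicks per second while a whale produces codas back to back."""
    return clicks_per_coda / coda_period_s


def annual_clicks(n_whales: int, coda_seconds_per_day: float, coda_fraction: float) -> float:
    """Total clicks (coda + echolocation) per year for a monitored group.

    coda_fraction is the share of all clicks that are coda clicks,
    so dividing the coda-click count by it adds the echolocation clicks back in.
    """
    if not 0.0 < coda_fraction <= 1.0:
        raise ValueError("coda_fraction must be in (0, 1]")
    coda_clicks = n_whales * coda_seconds_per_day * DAYS_PER_YEAR * coda_click_rate()
    return coda_clicks / coda_fraction


if __name__ == "__main__":
    print(coda_click_rate())  # 1.25 clicks/s, matching the caption
    # Hypothetical inputs: 50 whales, 600 s of codas per whale per day, 25% coda share.
    print(f"{annual_clicks(50, 600.0, 0.25):,.0f} clicks/year")
```

Because the total scales linearly with the whale count and inversely with the coda share, the tenfold span between 400M and 4B is consistent with 8× from the whale count (50 to 400) times 1.25× from the coda share (25% versus 20%). The per-whale coda volume behind the absolute figures is not stated in the caption.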

Dive Deeper:

Meet The Project CETI Team

Cornell University: Cetacean Translation Initiative: a roadmap to deciphering the communication of sperm whales, by the current scientific members of the Project CETI collaboration. April 2021

Harvard School of Engineering: Talking with whales. Project aims to translate sperm whale calls. April 2021

National Geographic: Groundbreaking effort launched to decode whale language. With artificial intelligence and painstaking study of sperm whales, scientists hope to understand what these aliens of the deep are talking about. April 2021

National Geographic: David Gruber: Researching with respect and a gentler touch. National Geographic Explorer David Gruber and his team are taking a delicate approach to understanding sperm whales. March 2021

TED Audacious: What if we could communicate with another species? September 2020

Simons Institute: Sperm Whale Communication: What we know so far / Understanding Whale Communication: First steps, with David Gruber. August 2020