Capturing 3D Audio Underwater

Listen up, divers! Audio engineer turned techie Symeon Manias is bringing 3D audio to the underwater world to provide a more immersive and convincing auditory experience to listeners, whether it’s video, film, or virtual reality. So, pump up the volume: here’s what you need to know.

by Symeon Manias

Header image by Peter Gaertner/Symeon Manias and edited by Amanda White

Spatial hearing provides sound-related information about the world. Where does a sound come from? How far away is it? In what space does the sound reside? Humans are pretty good at localizing sound sources and making sense of the world of sounds. The human auditory system performs localization tasks either by relying solely on auditory cues or by fusing them with visual, dynamic, or experience-based cues. Auditory cues are either obtained by one ear, which is termed monaural, or by both ears, which is termed binaural.

Humans can detect sounds with frequency content between 20 Hz and 20 kHz. In air, that translates to wavelengths between 1.7 cm and 17 m. This frequency range narrows with age as the internal parts of the ear degrade, and exposure to loud noises can accelerate this degradation. The peripheral part of each human ear, which by no coincidence is common to most mammals, consists of three structures: the external ear, the middle ear, and the inner ear. Figure 1 shows all these parts of the ear in detail, along with their basic structure.

Courtesy of Evangelos Pantazis.
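The wavelength figures quoted above for the limits of human hearing follow from the relation wavelength = speed of sound / frequency. The short sketch below reproduces them, assuming a speed of sound in air of 343 m/s (the value used later in this article); the function name is only illustrative.

```python
# Assumed speed of sound in air, in m/s (approximate room-temperature value).
SPEED_OF_SOUND_AIR = 343.0


def wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_AIR):
    """Return the wavelength in metres for a given frequency: lambda = c / f."""
    return speed_m_s / frequency_hz


# Lower and upper limits of human hearing.
for frequency in (20.0, 20_000.0):
    print(f"{frequency:8.0f} Hz -> {wavelength_m(frequency):.3f} m")
```

Passing a different `speed_m_s` shows how the same frequencies map to much longer wavelengths in water.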

The external ear consists mainly of the pinna, the concha, and the ear canal, and ends at the eardrum. The pinna and concha, which are visible as the outer part of the ear, are responsible for modifying the frequency spectrum of sounds coming from different directions. The ear canal, on the other hand, is responsible for enhancing specific frequencies of most incoming sounds. The ear canal can be seen as a resonant tube with a resonant frequency that depends on the length and diameter of the tube. For most humans, the resonant frequency of the ear canal is between 2 kHz and 4 kHz. This means that all sounds that travel through the ear canal will be enhanced in that frequency range. Airborne sounds travel from the world through the pinna, the concha, and the ear canal, and hit the eardrum, which is at the end of the ear canal. The eardrum separates the outer from the middle ear and acts as a transformer that converts sounds to mechanical vibration.
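As a rough check on the 2 kHz to 4 kHz figure, the ear canal can be idealized as a tube that is open at the concha and closed at the eardrum, which resonates near a quarter wavelength. The sketch below uses that idealization only; the canal lengths are assumed illustrative values, and the model ignores the tube diameter and the compliance of the eardrum.

```python
def quarter_wave_resonance_hz(length_m, speed_m_s=343.0):
    """First resonance of a tube closed at one end: f = c / (4 * L)."""
    return speed_m_s / (4.0 * length_m)


# Assumed canal lengths in metres (illustrative, not measured values).
for length in (0.022, 0.025, 0.030):
    print(f"L = {length * 100:.1f} cm -> {quarter_wave_resonance_hz(length):.0f} Hz")
```

Even this crude model lands inside the quoted range, which suggests the canal length is the dominant factor.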

On the other side of the eardrum, in the middle ear, there are three ossicles: the malleus, the incus, and the stapes. These bones are responsible for transmitting the sounds from the outer ear through vibration to the entrance of the inner ear at the oval window. This is where mechanical vibration is transformed back into sound, now travelling through the fluid of the inner ear, to then be received by the sound receptors in the inner ear.

One might wonder why we even need the middle ear if the incoming sound is first transformed at the eardrum into mechanical vibration and then back to sound at the oval window. If the middle ear were absent, it is very likely that most of the incoming sound would be reflected back at the eardrum, because airborne sound couples poorly into the fluid-filled inner ear. Another reason is that the middle ear generally acts as a dynamics processor; it has a protective function. When a loud sound travels through the ear canal and hits the eardrum, the muscles that are attached to the middle ear ossicles can attenuate the mechanical vibration. This phenomenon is known in psychoacoustics as the acoustic reflex. The middle ear also reduces the transmission of internally generated sounds that are transmitted through bone conduction, and lets air reach the back side of the eardrum through the Eustachian tube, which balances the pressure that builds up on the eardrum.

The inner ear is a very complex structure which is responsible for two functions: one is the vestibular system, which is responsible for our orientation and balance in the three-dimensional world, and the other is the cochlea, which is dedicated to sound perception. The cochlea, which looks like a hard shell, is essentially a hard bone filled with fluid and consisting of rigid walls which continue like a spiral with decreasing diameter. Sounds enter the cochlea through the oval window and cause the liquid inside the cochlea to begin to ripple, and that’s where the magic happens.

There are two membranes inside the cochlea: the vestibular membrane, which separates the cochlear duct from the vestibular duct, and the basilar membrane. The basilar membrane is where the organ of Corti resides; this area contains two sets of sensory receptors, named hair cells: the inner and the outer hair cells. The inner hair cells, which move as the incoming sound waves travel through the cochlear fluid, act as transducers that transform the motion into neural spike activity that is then sent to the auditory nerve.

The outer hair cells can influence the overall sensitivity of each perceived sound. The neural spikes that travel through the auditory nerve are then received by the brain and are interpreted as sounds that we know and can understand. Different sections of the basilar membrane inside the cochlea are responsible for interpreting different frequencies of sound. The section near the entrance contains hair cells that are responsible for the interpretation of high-pitched sounds and, as we move further inside the cochlea, the hair cells detect progressively lower-pitched sounds.

Sound Source Localization

A single ear is basically a frequency analyzer that can provide information to the brain about the pitch of a sound, with some dynamics processing that can protect us from loud sounds and some basic filtering of sounds that come from different directions. So what can we do with two ears that is almost impossible to do with one? Two ears can provide information about another dimension, the direction, and consequently can give us an estimate of the location of a sound. This is termed spatial hearing. The main theories about how spatial hearing works developed around the idea of how we localize sounds with two ears and get information about the environment in which they reside.

The human auditory system deploys an assortment of localization cues to determine the location of a sound event. The underlying human localization theory is that the main auditory cues that assist with localization are the time and level differences between the two ears and the shape of the ear itself. The most prominent auditory cues use both ears for determining the direction of a sound source and are called binaural cues. These comprise the time difference of a sound arriving at the two ears, termed the interaural time difference (ITD), and the level difference between the two ears, termed the interaural intensity difference (IID), also known as the interaural level difference (ILD). Sound arrives at the two ears at different times, and the time delay can be up to approximately 0.7 milliseconds (ms). ITD is meaningful for frequencies up to approximately 1.5 kHz, while IID is meaningful for frequencies above 1.5 kHz. See Figure 2 for a visual explanation of the ITD and IID.
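To get a feel for where the figure of roughly 0.7 ms comes from, the ITD can be approximated with Woodworth's classic spherical-head formula, ITD = (a / c)(θ + sin θ), where a is the head radius, c the speed of sound, and θ the source azimuth in radians. The sketch below is only an approximation: the head radius of 8.75 cm is an assumed typical value, and the formula is meant for distant sources between 0 and 90 degrees to one side.

```python
import math


def woodworth_itd_s(azimuth_deg, head_radius_m=0.0875, speed_m_s=343.0):
    """Approximate ITD in seconds for a distant source (spherical-head model).

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_m_s) * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    print(f"{azimuth:3d} deg -> {woodworth_itd_s(azimuth) * 1000:.2f} ms")
```

For a source directly to one side, this model gives a delay in the region of the maximum value quoted above.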

ITD and IID alone cannot adequately describe the sound localization process. Imagine a sound coming from directly in front versus the same sound coming from directly behind. ITD and IID take exactly the same values in both cases, since the distance from the source to each ear is exactly the same. This is true for all sounds that are on the median plane, which is the plane defined by the points directly in front of us, above us, and behind us. This is where monaural cues play a role. These are generated by the pinna and by diffraction and reflections from our torso and head. The frequencies of sounds coming from behind or above our head will be attenuated differently than those of sounds coming from the front. Another set of cues are dynamic cues, which are generated by moving our head and reveal the effect of head movement on ITD and IID. Last but not least, visual and experience-based cues play an important role as well. Visual cues, when available, are fused with auditory cues and result in more accurate source localization.

Localizing Sound Underwater

If, under certain conditions, humans can localize a sound source with accuracy as high as 1 degree in the horizontal plane, what makes it so difficult to understand where sounds are coming from when we are underwater? The story of sound perception becomes very different underwater. When we are submerged, the localization abilities of the auditory system degrade significantly. The factor that has the biggest impact when we enter the water is the speed of sound. The speed of sound in air is approximately 343 m/s, while underwater it is approximately 1480 m/s, depending mainly on temperature and salinity, among other factors. A speed of sound that is more than four times higher than in air results in sound arriving at our ears with much smaller time differences, which could diminish or even completely eliminate our spatial hearing abilities.
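The effect of the higher speed of sound on time differences can be sketched numerically. The example below scales an in-air ITD by the ratio of the two speeds, and then asks what azimuth a listener "calibrated" for air would infer from the smaller underwater ITD. The second step assumes a simple sine-law model (ITD proportional to the sine of the azimuth) and ignores other underwater effects such as bone conduction, so it is an illustration rather than a prediction.

```python
import math

C_AIR = 343.0     # m/s, approximate speed of sound in air
C_WATER = 1480.0  # m/s, approximate speed of sound in water


def scaled_itd_ms(itd_air_ms, c_air=C_AIR, c_water=C_WATER):
    """ITD for the same geometry when the medium changes from air to water."""
    return itd_air_ms * c_air / c_water


def apparent_azimuth_deg(true_azimuth_deg, c_air=C_AIR, c_water=C_WATER):
    """Azimuth an air-calibrated listener would infer from the underwater ITD.

    Assumes a sine-law model: ITD is proportional to sin(azimuth) / c.
    """
    scaled_sine = math.sin(math.radians(true_azimuth_deg)) * c_air / c_water
    return math.degrees(math.asin(scaled_sine))


print(f"Max ITD underwater: about {scaled_itd_ms(0.7):.2f} ms")
for azimuth in (30, 60, 90):
    print(f"true {azimuth:2d} deg -> perceived {apparent_azimuth_deg(azimuth):.1f} deg")
```

Under these assumptions, every source appears squeezed toward the front, which is consistent with the degraded localization described above.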

The perception of time differences is not the only cue that is affected; the perception of interaural intensity differences is also affected by the fact that there is very little mechanical impedance mismatch between water and our head. In air, our head acts as an acoustic barrier that attenuates high frequencies due to the shadowing effect. Underwater, sound most likely travels directly through our head, since the impedances of water and our head are very similar and the head is acoustically transparent. These factors might suggest that underwater, humans could be considered one-eared mammals.
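The impedance argument can be made concrete with the standard normal-incidence formula for the fraction of sound intensity transmitted across a boundary between two media, T = 4 Z1 Z2 / (Z1 + Z2)^2, where each characteristic impedance Z is density times speed of sound. The densities and the soft-tissue values below are assumed textbook-style approximations chosen only for illustration; real heads contain bone and air cavities that this sketch ignores.

```python
def impedance(density_kg_m3, speed_m_s):
    """Characteristic acoustic impedance Z = rho * c (in rayl)."""
    return density_kg_m3 * speed_m_s


def intensity_transmission(z1, z2):
    """Fraction of intensity transmitted at normal incidence from medium 1 to 2."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


# Assumed approximate material values (illustrative only).
Z_AIR = impedance(1.2, 343.0)
Z_WATER = impedance(1000.0, 1480.0)
Z_TISSUE = impedance(1050.0, 1540.0)

print(f"air   -> tissue: {intensity_transmission(Z_AIR, Z_TISSUE):.4%}")
print(f"water -> tissue: {intensity_transmission(Z_WATER, Z_TISSUE):.4%}")
```

With these numbers, almost nothing crosses the air-to-tissue boundary while almost everything crosses the water-to-tissue boundary, which is why the head casts an acoustic shadow in air but not underwater.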

In principle, the auditory system performs very poorly underwater. On the other hand, there have been studies and early reports by divers that suggest that some primitive type of localization can be achieved underwater. In a very limited study that aimed to determine the minimum audible angle, divers were instructed to perform a left-right discrimination and were able to improve their ability when they were given feedback on their performance.

In another study [5], various underwater projectors were placed at 0, +/-45, and +/-90 degrees at ear level in the horizontal plane, with 0 degrees being in front of the diver. The stimuli consisted of pure tones and noise. The results indicated that divers could localize well enough that their performance could not be written off as coincidence or chance. It is not entirely clear whether underwater localization is an ability that humans can learn and adapt to, or whether it is limited by the human auditory system itself.

Assistive Technologies for Sound Recording

In a perfect world, humans could perform localization tasks underwater as accurately as they could in air. Until that day, should it ever come, we can use assistive technologies to sense the underwater sound environment. Assistive technologies can be exploited for two main applications of 3D audio underwater: localization of sound and sound recording. Sound sensing with microphones is a well-developed topic, especially nowadays, since the cost of building devices with multiple microphones has decreased dramatically. Multi-microphone devices can be found everywhere in our daily life, from portable devices to cars. These devices use multiple microphones to make sense of the world and give the user not only improved sensing abilities but also the ability to capture sounds with great accuracy.

The aim of 3D audio is to provide an immersive, appealing, and convincing auditory experience to a listener. This can be achieved by delivering the appropriate spatial cues using a reproduction system that consists of either headphones or loudspeakers. There are quite a few techniques regarding 3D audio localization and 3D audio recording that one can exploit for an immersive experience. Traditionally, recording relies on a small number of sensors whose signals are either played back directly through loudspeakers or mixed linearly and then fed to loudspeakers. The main idea here is that if we can approximate the binaural cues, we can trick the human auditory system into thinking that sounds come from specific directions and give the impression of being there.

One of the earliest approaches in the microphone domain was a stereophonic technique invented by Alan Blumlein at EMI [6]. Although this technique was invented in the early 1930s, it took many decades to actually commercialize it. From there on, many different stereophonic techniques were developed that aimed at approximating the desired time and level differences to be delivered to the listener. This was done either by changing the distance between the sensors or by placing directional sensors very close together and delivering the directional cues by exploiting the directivity of the sensors. The transition from stereophonic to surround systems came right after, using multiple sensors for capturing sounds and multiple loudspeakers for playback. These are, for example, the traditional microphone arrangements we can see above an orchestra playing in a hall.
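To illustrate how closely spaced directional sensors turn direction into a level difference, the sketch below models one well-known coincident arrangement: two ideal figure-of-eight sensors crossed at 90 degrees, commonly called a Blumlein pair. The ideal cosine directivity and the angle convention (0 degrees in front, positive to the left) are assumptions of this example, not details taken from the patent.

```python
import math


def blumlein_pair_gains(azimuth_deg):
    """Gains of two coincident figure-of-eight sensors aimed at +45 and -45 deg."""
    theta = math.radians(azimuth_deg)
    left = math.cos(theta - math.radians(45.0))
    right = math.cos(theta + math.radians(45.0))
    return left, right


def level_difference_db(left, right):
    """Left-minus-right level difference in dB (infinite if a channel is silent)."""
    if right == 0.0:
        return math.inf
    if left == 0.0:
        return -math.inf
    return 20.0 * math.log10(abs(left) / abs(right))


for azimuth in (0, 10, 20, 30):
    left_gain, right_gain = blumlein_pair_gains(azimuth)
    print(f"{azimuth:2d} deg -> {level_difference_db(left_gain, right_gain):5.1f} dB")
```

Because the sensors share one position, there is no time difference at all; the direction is encoded purely in the level difference between the two channels.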

A more advanced technology that has existed since the 1970s is called Ambisonics. It is a unified, generalized approach to the recording, analysis, and reproduction of any recorded sound field. It uses a standard microphone recording setup with four sensors placed almost coincidently in a tetrahedral arrangement. This method provides great flexibility in terms of playback: once the audio signals are recorded, they can be played back on any type of output setup, such as a stereophonic pair of loudspeakers, a surround setup, or plain headphones. The mathematical background of this technique is based on solving the acoustic wave equation, which can make it inconvenient for a non-expert to use in practice.

Fortunately, there are tools that make this process straightforward for the non-expert. In practical terms, this technology is based on recording signals from various sensors and then combining them to obtain a new set of audio signals, called ambisonic signals. The requirement for acquiring optimal ambisonic signals is to record the signals with sensors placed on the surface of a sphere for practical and theoretical convenience. The process is based on sampling the sound field pressure over a surface or volume around the origin. The accuracy of the sampling depends on the array geometry, the number of sensors, and the distance between the sensors. The encoded ambisonic signals can then accurately describe the sound field.
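The step of combining sensor signals into ambisonic signals can be sketched for the classic four-capsule tetrahedral microphone. The sums and differences below are the textbook conversion from the four capsule signals (often called A-format) to first-order ambisonic signals W, X, Y, Z (B-format). The capsule order, the ideal cardioid capsule model, and the absence of any gain normalization or frequency-dependent correction are assumptions of this example; practical arrays, including open hydrophone arrays, need additional encoding filters.

```python
import math

# Unit look directions (x = front, y = left, z = up) of the four capsules:
# front-left-up, front-right-down, back-left-down, back-right-up.
_S = 1.0 / math.sqrt(3.0)
CAPSULE_DIRS = [(_S, _S, _S), (_S, -_S, -_S), (-_S, _S, -_S), (-_S, -_S, _S)]


def a_to_b_format(flu, frd, bld, bru):
    """Combine four capsule samples into first-order signals (W, X, Y, Z)."""
    w = flu + frd + bld + bru  # omnidirectional component
    x = flu + frd - bld - bru  # front-back component
    y = flu - frd + bld - bru  # left-right component
    z = flu - frd - bld + bru  # up-down component
    return w, x, y, z


def cardioid_capsule_gains(direction):
    """Gains of four ideal cardioid capsules for a unit plane wave from `direction`."""
    return [0.5 * (1.0 + sum(u * d for u, d in zip(capsule, direction)))
            for capsule in CAPSULE_DIRS]


# A plane wave arriving from straight ahead should excite only W and X.
w, x, y, z = a_to_b_format(*cardioid_capsule_gains((1.0, 0.0, 0.0)))
print(f"W={w:.3f} X={x:.3f} Y={y:.3f} Z={z:.3f}")
```

In this idealized model, X, Y, and Z come out proportional to the components of the arrival direction, which is what makes the later direction analysis possible.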

By using ambisonic signals, one can approximate the pressure and particle velocity of the sound field at the point where the sensors are located. By estimating these two quantities, we can then perform an energetic analysis of the sound field and estimate the direction of a sound by using the active intensity vector. The active intensity vector points in the direction of the energy flow in a sound field. The opposite direction points to where the energy is coming from and therefore gives the direction of arrival. A generic view of such a system is shown in Figure 3. For more technical details on how a three-dimensional sound processor works, the reader is referred to [3,7].

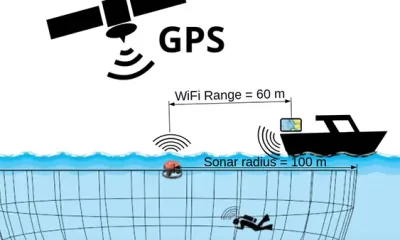
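The direction estimate described above can be sketched with first-order ambisonic signals. The example assumes signals encoded so that a plane wave arriving from unit direction d produces X, Y, Z equal to W times the components of d (up to a common positive factor). Under that assumption, the time-averaged products of W with X, Y, and Z point toward the source, and the active intensity vector (the energy flow) points the opposite way. The function name and the test signal are hypothetical.

```python
import math


def doa_from_b_format(w, x, y, z):
    """Estimate (azimuth_deg, elevation_deg) of arrival from B-format samples."""
    n = len(w)
    # Time-averaged pressure-velocity products; this vector points at the source.
    dx = sum(wi * xi for wi, xi in zip(w, x)) / n
    dy = sum(wi * yi for wi, yi in zip(w, y)) / n
    dz = sum(wi * zi for wi, zi in zip(w, z)) / n
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    if norm == 0.0:
        raise ValueError("no directional energy in the signals")
    azimuth = math.degrees(math.atan2(dy, dx))
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, dz / norm))))
    return azimuth, elevation


# Synthetic plane wave from azimuth 30 deg, elevation 10 deg.
az, el = math.radians(30.0), math.radians(10.0)
direction = (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))
signal = [math.sin(0.05 * n) for n in range(400)]
w_sig = signal
x_sig, y_sig, z_sig = ([d * s for s in signal] for d in direction)
print(doa_from_b_format(w_sig, x_sig, y_sig, z_sig))
```

Real processors typically run this kind of analysis separately in many frequency bands and short time frames, so that several simultaneous sources can be tracked.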

For underwater audio-related applications, a similar sensor is used, namely the hydrophone. The signal processing principles that can be used underwater are very similar to the ones used with microphones. However, the applications are not as widespread. Multiple hydrophones, known as hydrophone arrays, have been used for localization tasks, but many of them are based on classical theories that require the hydrophones to be very far apart from each other. Therefore, compact, wearable devices are uncommon. A compact hydrophone array that can be carried by a single diver can serve multiple purposes: it can operate as a localization device that can locate quite accurately where sounds are originating from, and it can also capture the sounds with spatial accuracy so that they can later be played back and give the experience of being there. This can be especially useful for people who do not have the chance to experience the underwater world at all, or who want to listen to the sounds of highly inaccessible places where diving is only for highly skilled technical divers.

A recently published article presents, as a proof of concept, a purpose-built compact hydrophone array that can be operated by a diver and that can simultaneously perform a localization task and record spatial audio for later playback through a pair of headphones. It suggests that a simple set of four hydrophone sensors can be exploited in real time to identify the direction of underwater sound objects with relatively high accuracy, both visually and aurally.

Underwater sound localization is not a new technology, but the ability to do such tasks with compact hydrophone arrays, where the hydrophones are closely spaced, has the potential for many applications. The hydrophone array used in the study discussed above was an open-body hydrophone array consisting of four sensors placed at the vertices of a tetrahedral frame. A photo of the hydrophone array is shown in Figure 4. More technical details on the hydrophone array can be found in the following publication [2]. The hydrophone array was used to provide a visualization of the sound field. The sound-field visualization is basically a sound activity map in which the relative energy for many directions is depicted using a color gradient, as in Figure 5. These experiments were performed in a diving pool.
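The idea of an activity map, relative energy as a function of direction, can be sketched with a very simple method: steer a virtual cardioid beam across a grid of directions and measure the energy of its output. This is not the method used in the study; it is a minimal illustration restricted to the horizontal plane, and it assumes first-order ambisonic signals in which a plane wave from azimuth φ gives X = W cos φ and Y = W sin φ.

```python
import math


def horizontal_activity_map(w, x, y, step_deg=10):
    """Relative beam energy (0..1) per azimuth, using a steered virtual cardioid."""
    energies = {}
    for azimuth in range(-180, 180, step_deg):
        c = math.cos(math.radians(azimuth))
        s = math.sin(math.radians(azimuth))
        beam = [0.5 * (wi + c * xi + s * yi) for wi, xi, yi in zip(w, x, y)]
        energies[azimuth] = sum(b * b for b in beam) / len(beam)
    peak = max(energies.values())
    return {az: (e / peak if peak > 0.0 else 0.0) for az, e in energies.items()}


# Synthetic source at 40 degrees azimuth.
source = [math.sin(0.1 * n) for n in range(200)]
phi = math.radians(40.0)
activity = horizontal_activity_map(
    source,
    [math.cos(phi) * v for v in source],
    [math.sin(phi) * v for v in source],
)
print("strongest direction:", max(activity, key=activity.get), "deg")
```

Mapping the returned values onto a color gradient over a grid of azimuths and elevations would give a picture in the spirit of the activity map described above, although a first-order beam produces a much blurrier map than more advanced analysis methods.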

The depth of the hydrophone array was fixed by attaching it to the end of a metal rod, which was made out of a three-piece aluminum tube. The hydrophone array signals were recorded and processed in real time with a set of virtual instrument plugins. The observer, a single action camera in this case, was able to clearly track the movements of the diver from the perspective of the array, both visually and aurally. The diver from the perspective of the array and the corresponding activity map are both shown in Figure 5. A video demonstrating the tracking and headphone rendering with a diver inside the swimming pool can be found here.

This example serves as a proof of concept that compact arrays can indeed be exploited by divers simultaneously for sound visualization and accurate three-dimensional sound recording. In the examples shown, the sound arrives at the hydrophone array, and the user can then see the location of the sound and simultaneously listen to the acoustic field. The three-dimensional audio cues are delivered accurately, and the sound sources are presented to the user in their correct spatial locations so the user can accurately localize them.

3D Video Recording

The current state of the art in 3D video recording underwater usually captures the audio with the microphones built into the cameras. Although sounds are often recorded in this way, built-in microphones enclosed in a casing cannot capture underwater sounds accurately. A relatively compact hydrophone array such as the one demonstrated could potentially enhance the audio-visual experience by providing accurate audio rendering of the recorded sound scene. Visuals and specific 3D video recordings can be pretty impressive by themselves, but the addition of an accompanying 3D audio component can potentially enhance the experience.

Ambisonic technologies are a great candidate for such an application, since the underwater sound field can be easily recorded with an ambisonic hydrophone array (such as the one shown in Figure 4) that can be mounted near or within the 3D camera system. Ambisonics aims at a complete reconstruction of the physical sound scene. Once the sound scene is recorded and transformed into the ambisonic format, a series of additional spatial transformations and manipulations can come in handy. Ambisonics allows straightforward manipulation of the recorded sound scene, such as rotating the whole sound field to arbitrary directions, mirroring the sound field about any axis, direction-dependent manipulation such as focusing on different directions or areas, or even warping the whole sound field. The quality of the reproduced sound scene and the accuracy of all the sound scene manipulation techniques depend heavily on the number of sensors involved in the recording.
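Two of the manipulations mentioned above, rotation and mirroring, are particularly simple for first-order ambisonic signals. The sketch below rotates the sound field about the vertical axis and mirrors it left to right, one sample at a time. It assumes the convention x = front, y = left, z = up, with positive angles turning sources toward the left; the function names are illustrative.

```python
import math


def rotate_yaw(w, x, y, z, angle_deg):
    """Rotate the sound field about the vertical axis by angle_deg.

    A source at azimuth phi ends up at azimuth phi + angle_deg.
    """
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return w, c * x - s * y, s * x + c * y, z


def mirror_left_right(w, x, y, z):
    """Mirror the sound field about the median plane (swap left and right)."""
    return w, x, -y, z


# A source straight ahead (X = 1, Y = 0) rotated by 90 degrees moves to the left.
print(rotate_yaw(1.0, 1.0, 0.0, 0.0, 90.0))
```

For a recording, the same operation is applied to every sample of the four signals. In head-tracked headphone playback, a rotation of this kind is what keeps the sound scene stable while the listener turns their head.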

Some modern, state-of-the-art techniques are based on the same recording principle, but they can provide an enhanced audio experience with a small number of sensors by exploiting human hearing abilities as well as advanced statistical signal processing techniques. These techniques aim to imitate the way human hearing works, and by processing the sounds in time, frequency, and space, they deliver the necessary spatial cues for an engaging aural experience, which is usually perceived as very close to reality. They exploit the limitations of the human auditory system and analyze only the properties of the sound scene that are meaningful to a human listener. Once the sound scene is recorded and analyzed into the frequency content of different sounds, it can then be manipulated and synthesized for playback [7].

Dive Deeper

- [1] Delikaris Manias, Symeon, Leo McCormack, Ilkka Huhtakallio, and Ville Pulkki. “Real-time underwater spatial audio: a feasibility study.” Audio Engineering Society, 2018.

- [2] Spatial Audio Real-Time Applications (SPARTA).

- [3] Gerzon, Michael A. “Periphony: With-height sound reproduction.” Journal of the Audio Engineering Society, 1973.

- [4] Feinstein, Stephen H. “Acuity of the human sound localization response underwater.” The Journal of the Acoustical Society of America, 1973.

- [5] Hollien, Harry. “Underwater sound localization in humans.” The Journal of the Acoustical Society of America, 1973.

- [6] Blumlein, A. D. “British patent specification 394,325 (improvements in and relating to sound-transmission, sound-recording and sound-reproducing systems).” Journal of the Audio Engineering Society, 6(2):91–130, 1958.

- [7] Pulkki, Ville, Symeon Delikaris Manias, and Archontis Politis. Parametric Time-Frequency Domain Spatial Audio. John Wiley & Sons, 2018.

Dr. Symeon Manias has worked in the field of spatial audio since 2008. He has received awards from the Audio Engineering Society, the Nokia Foundation, the Foundation of Aalto Science and Technology, and the International College of the European University of Brittany. He is an editor and author of the first parametric spatial audio book from Wiley and IEEE Press. Manias can usually be found diving through the kelp forests of Southern California.

Contact: symewn@gmail.com